The LiDAR wars are heating up. This is not surprising, since six companies have gone public in the past six months and become LUnicorns via mergers with SPACs (Special Purpose Acquisition Companies). Billion-dollar valuations, the pressure of reporting quarterly losses and the need to boost stock prices have essentially led to a LiDAR range war: specifically, how far can a LiDAR “see” and recognize cars, pedestrians, animals, hazards, road debris, etc.?

Eye safety considerations limit LiDARs operating at 8XX-9XX nm to shorter ranges than those operating at 14XX-15XX nm. The latter can use higher laser power because the human cornea absorbs light at these wavelengths, limiting damage to the retina. Longer-wavelength systems are more expensive (2-3X relative to 8XX-9XX nm systems), so companies operating at these wavelengths need to justify the higher LiDAR cost, and range performance currently seems to be an important argument. Princeton Lightwave and Luminar were the first to announce and demonstrate 200-300 m ranges with their 15XX nm automotive LiDARs. Aeva recently announced that its 15XX nm Frequency Modulated Continuous Wave (FMCW) LiDAR can detect cars at 500 m and pedestrians at 350 m. Before that, AEye advertised a 1000 m range for detecting cars. Not to be outdone, Argo recently announced that its Geiger Mode LiDAR, operating at > 1400 nm, has a range of 400 m (Argo acquired Princeton Lightwave in 2017). Argo claims that its LiDAR can detect cars with 1% reflectivity at night (the night qualifier is a bit confusing, since for Geiger Mode the more challenging condition is bright sunshine). It is not clear whether the 400 m range is achievable in bright sunlight with a 1% reflective object (that would be groundbreaking!), or whether object recognition, and not just detection, is also possible under these conditions.

LiDAR range is important for L4 autonomous vehicles (AVs), but the specification is nuanced. For safety-critical obstacle avoidance, the AV perception engine needs to recognize road hazards early enough to allow safety maneuvers, such as braking to avoid tire debris. What matters is the range for a particular object reflectivity (10% seems to be a reasonable standard) at a high confidence level (> 99%) of hazard recognition; otherwise, the false alarm rate would be very high, causing constant braking, passenger discomfort and complaints. It is worth noting the difference between detection (“something is out there, but we don’t know what it is”) and recognition (“the something out there is a stalled car or a pedestrian”). Too often, the range numbers thrown around relate to detection, which is generally not actionable. Recognition is a harder problem that depends on resolution (see below) and on the accuracy of real-time image processing.
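The reflectivity qualifier matters because a range quoted on a 1% target and one quoted on a 10% target are not directly comparable. A rough way to put them on the same footing is sketched below. It assumes a simplified model (an extended Lambertian target that fills the laser spot, so received power scales as reflectivity / range²) and ignores atmospheric loss, solar background and detector behavior; `equivalent_range` is a hypothetical helper for illustration, not any vendor's specification method.

```python
import math

def equivalent_range(range_m: float, reflectivity: float,
                     ref_reflectivity: float = 0.10) -> float:
    """Range at which a target of `ref_reflectivity` would return the same
    signal as a target of `reflectivity` seen at `range_m`.

    Assumed model: extended Lambertian target filling the laser spot, so
    received power ~ reflectivity / range**2. Atmospheric loss, sunlight
    and detector effects are ignored.
    """
    return range_m * math.sqrt(ref_reflectivity / reflectivity)

# 400 m on a 1% target, re-expressed for a 10% target
print(f"{equivalent_range(400, 0.01):.0f} m")
# 200 m on a 10% target, re-expressed for a 1% target
print(f"{equivalent_range(200, 0.10, ref_reflectivity=0.01):.0f} m")
```

Under this simplified model, a 1% target at 400 m returns roughly as much light as a 10% target at about 1265 m, which is one way to see why the Argo claim would be remarkable if it held in sunlight. The model says nothing about recognition, which also depends on resolution.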

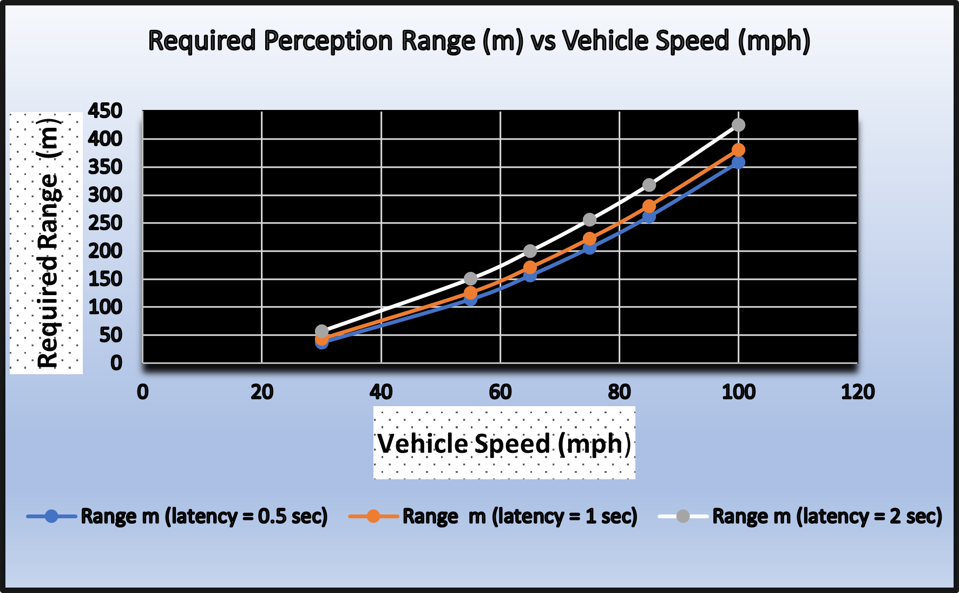

AVs use their sensors, perception engines and artificial intelligence to apply five basic control actions: brake, steer, accelerate , decelerate or park. By far, the most critical and time/safety sensitive action is braking when obstacles emerge. The braking distance (distance required to reduce velocity to zero) is a function of the initial vehicle speed and a safe/comfortable deceleration (typically 0.3g or about 3 m/s2). The required perception range is a function of the vehicle velocity and the latency. Latency is the time interval between acquiring raw sensor data and applying the safety action (in this case, braking), and impacts the required range because the vehicle continues to move during this period. Higher latency demands lower operating vehicle speeds or higher range. Figure 1 shows the relationship between these three quantities.

Figure 1: Required Perception Range for Obstacle Avoidance (Braking at 0.3g)
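The relationship in Figure 1 can be approximated as the distance travelled during the latency interval plus the braking distance, R = v·t + v²/(2a). The sketch below assumes constant deceleration at the 0.3g from the text; the speeds and latencies in the loop are illustrative values chosen here, not data read off Figure 1.

```python
G = 9.81                 # standard gravity, m/s^2
MPS_PER_MPH = 0.44704    # exact mph -> m/s conversion factor

def required_range_m(speed_mps: float, latency_s: float,
                     decel_mps2: float = 0.3 * G) -> float:
    """Distance travelled during the latency interval plus braking distance.

    R = v * t_latency + v**2 / (2 * a), assuming constant deceleration a.
    """
    return speed_mps * latency_s + speed_mps ** 2 / (2 * decel_mps2)

# Illustrative speeds and latencies (assumed, not taken from Figure 1)
for mph in (30, 45, 60, 70):
    v = mph * MPS_PER_MPH
    cells = [f"{lat:.1f} s -> {required_range_m(v, lat):4.0f} m"
             for lat in (0.1, 0.5, 1.0)]
    print(f"{mph} mph: " + ", ".join(cells))
```

The latency term grows linearly with speed: at 70 mph (about 31.3 m/s), every additional 100 ms of latency adds roughly 3 m to the required range.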

How much range is enough, and what are the implications of promising ever-longer ranges? It is unlikely that L4 vehicles will operate above 60-70 mph on highways in the near future (5-10 years?). At these speeds, a range of about 200-250 m appears adequate. Heavy trucks need lower deceleration levels during braking and therefore longer ranges; however, their lower speeds (55-60 mph) mean that a 200-250 m range is still adequate. Weather is another factor to consider: deceleration levels in bad weather are lower, but so are vehicle speeds, which keeps 200-250 m a reasonable operating target.
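The 200-250 m argument can be checked by inverting the stopping-distance relation: given a range R, latency t and deceleration a, the highest safe speed is the positive root of v·t + v²/(2a) = R. In the sketch below, the 1 s latency and the 0.2g heavy-truck deceleration are assumptions chosen for illustration; the source does not give a specific truck value.

```python
import math

G = 9.81                 # standard gravity, m/s^2
MPS_PER_MPH = 0.44704    # exact mph -> m/s conversion factor

def max_speed_mps(range_m: float, latency_s: float,
                  decel_mps2: float = 0.3 * G) -> float:
    """Highest speed whose latency distance plus braking distance fits in range_m.

    Positive root of v*t + v**2/(2a) = R:
        v = -a*t + sqrt((a*t)**2 + 2*a*R)
    """
    a, t = decel_mps2, latency_s
    return -a * t + math.sqrt((a * t) ** 2 + 2 * a * range_m)

for r in (200, 250):
    car = max_speed_mps(r, 1.0) / MPS_PER_MPH
    # 0.2g is an assumed, illustrative heavy-truck deceleration
    truck = max_speed_mps(r, 1.0, decel_mps2=0.2 * G) / MPS_PER_MPH
    print(f"{r} m, 1 s latency: car ~{car:.0f} mph, truck ~{truck:.0f} mph")
```

With these assumptions, 200 m supports roughly 70 mph at 0.3g and roughly 58 mph at 0.2g, in line with the car and truck speeds discussed above.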

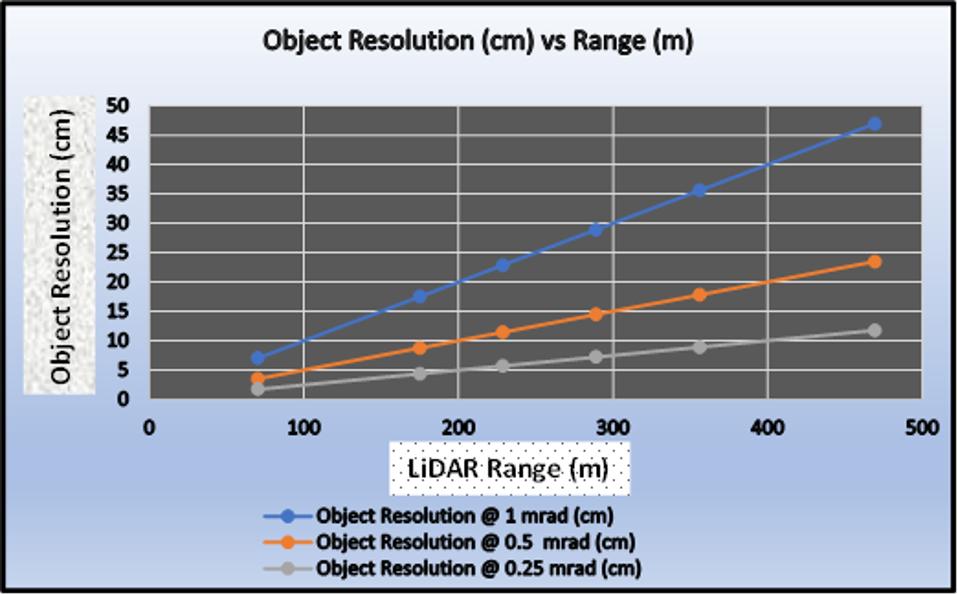

Attaining higher object recognition range is expensive, since more LiDAR resources need to be expended (laser power, more sensitive detection, higher power consumption, etc.). There are arguments for pushing the limit, such as added safety margins and certain use cases (for example, bad roads, bad weather, etc.). But the downside to pushing this performance metric are compromises in other parameters like object resolution, as shown in Figure 2.

Figure 2: Object Resolution Degradation with Increased Range
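The degradation in Figure 2 follows from geometry: with a fixed angular resolution θ, the lateral spacing between adjacent samples is approximately s = R·θ (small-angle approximation), so it grows linearly with range. The sketch below uses the 0.5 mrad and 15 cm debris figures from the text; the list of ranges is illustrative.

```python
def spot_spacing_m(range_m: float, angular_res_rad: float) -> float:
    """Lateral distance between adjacent samples, small-angle approximation s = R * theta."""
    return range_m * angular_res_rad

def samples_across(object_size_m: float, range_m: float, angular_res_rad: float) -> float:
    """Approximate number of samples spanning an object of the given size."""
    return object_size_m / spot_spacing_m(range_m, angular_res_rad)

THETA = 0.5e-3   # 0.5 mrad, the angular resolution quoted in the text
DEBRIS = 0.15    # 15 cm tire debris or brick, from the text

for r in (50, 100, 200, 400):
    s = spot_spacing_m(r, THETA)
    n = samples_across(DEBRIS, r, THETA)
    print(f"{r:>3} m: spacing {s * 100:4.1f} cm, ~{n:.2f} samples across 15 cm")
```

At a fixed angular resolution, doubling the range halves the number of samples on the object, which is why holding recognition performance at twice the range requires roughly halving θ.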

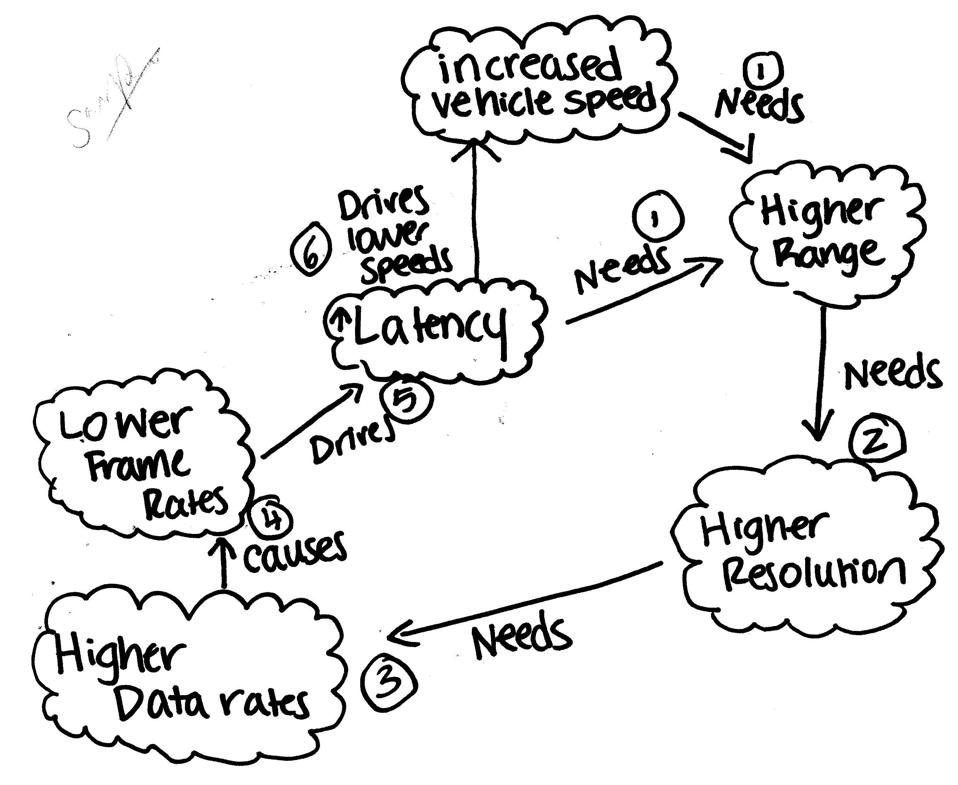

Object resolution dictates the confidence level for recognition, crucial for perception and control actions in autonomous cars. Detecting and recognizing tire debris or a brick (typically 6” or 15 cm) typically requires a resolution of 8 cm (at least 2 pixels are required for recognition, 3 is ideal). Doubling the range from 200m to 400m requires the angular resolution to improve from 0.5 mrad to 0.25 mrad, increasing the amount of raw data and compute bandwidth by 2X. This results in higher optics and compute costs. It also increases the latency, requiring higher perception range or a reduction in vehicle speed (see Figure 1). Hopefully, Figure 3 articulates this circularity more clearly.

Figure 3: The Range-Resolution-Rate Conundrum: 1) High Speeds & Latency Need Higher Range which 2) ... [+]
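The data-volume side of this circularity can be estimated by counting samples per second for a scanner that uniformly tiles its field of view. The sensor below (120° × 30° field of view at 10 Hz) is a hypothetical example, not a specific product. Under this uniform-sampling assumption, halving the angular resolution along one scan axis doubles the point count, which matches the 2X in the text; halving it along both axes quadruples it.

```python
import math

MRAD_PER_DEG = math.pi / 180 * 1000  # milliradians per degree

def points_per_second(h_fov_deg: float, v_fov_deg: float,
                      h_res_mrad: float, v_res_mrad: float,
                      frame_rate_hz: float) -> float:
    """Samples per second for a scanner that uniformly tiles its field of view."""
    columns = h_fov_deg * MRAD_PER_DEG / h_res_mrad
    rows = v_fov_deg * MRAD_PER_DEG / v_res_mrad
    return columns * rows * frame_rate_hz

# Hypothetical sensor: 120 deg x 30 deg field of view at 10 Hz
base = points_per_second(120, 30, 0.5, 0.5, 10)
one_axis = points_per_second(120, 30, 0.25, 0.5, 10)    # 0.5 -> 0.25 mrad horizontally
both_axes = points_per_second(120, 30, 0.25, 0.25, 10)  # 0.5 -> 0.25 mrad on both axes
print(f"baseline: {base / 1e6:.1f} M pts/s")
print(f"one axis refined: {one_axis / base:.1f}x, both axes: {both_axes / base:.1f}x")
```

More samples per frame must be transported and processed, which is where the added latency in the text's argument comes from.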

Promising ever-increasing range is not a recipe for superior LiDARs. Use cases and inter-related system specifications like resolution, frame rate and latency need to be considered. The exact conditions and definitions for achieving longer ranges must be clarified (reflectivity, confidence levels, latency, lighting conditions, angular resolution, etc.), and the perception function achieved at that range (detection, recognition, identification) needs to be clearly stated. As LiDARs become critical safety sensors, they need to mature in terms of universally accepted standards and specifications.